SEO copywriting for B2B is where intent meets persuasion under constraints: limited search demand, long sales cycles, and decision-makers who read like skeptics.

In my own work, the first hard lesson was that "more traffic" can be a trap. We initially targeted high-volume keywords (2,000+ monthly searches) and watched roughly 80% of that traffic bounce immediately. The pivot was uncomfortable: we moved toward "zero-volume" long-tail queries pulled from sales calls and support tickets, and we accepted the optics of lower traffic in exchange for better pipeline quality.

Based on our internal tracking, traffic volume decreased by around 40% post-pivot, but the lead qualification rate climbed from about 2% to nearly 9%.
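Why a 40% traffic drop can still be a win comes down to simple arithmetic. This back-of-the-envelope sketch assumes the qualification rate is measured as qualified leads per organic session (the text doesn't state the denominator). It uses an arbitrary, hypothetical baseline of 10,000 sessions; only the ratio matters.

```python
# Hypothetical baseline; any positive number gives the same ratio.
baseline_sessions = 10_000

# Figures from the text: traffic fell ~40%, qualification rate rose ~2% to ~9%.
# Assumption: "qualification rate" = qualified leads per organic session.
sessions_after = baseline_sessions * (1 - 0.40)
qualified_before = baseline_sessions * 0.02
qualified_after = sessions_after * 0.09

# Relative change in qualified leads after the pivot.
uplift = qualified_after / qualified_before
print(f"Qualified leads before: {qualified_before:.0f}")
print(f"Qualified leads after:  {qualified_after:.0f}")
print(f"Net change: {uplift:.1f}x")
```

Under that assumption, the pivot yields roughly 2.7x more qualified leads from 60% of the traffic. If the rate is measured per lead instead, the comparison needs lead counts, which aren't given here.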

The Intersection of Search Intent and Persuasion

What SEO copywriting means in B2B (in practice)

B2B SEO copywriting is not "writing for Google." It's writing for a human evaluator while meeting the minimum technical and semantic requirements that let Google trust the page enough to rank it.

That sounds obvious until you watch a page rank and still fail. The failure mode is usually above the fold: the page matches the query, but it doesn't answer the buyer's real question fast enough, so they leave.

Moving past keyword stuffing: intent is the unit of work

Keyword stuffing is easy to diagnose. Intent mismatch is harder because the page can look "optimized" while still being wrong for the moment the searcher is in.

When we chased those 2,000+ volume terms, the copy was technically relevant but psychologically irrelevant. Our analytics showed that broad, high-volume queries often pulled in students, job seekers, and vendors researching competitors. That's not a moral problem; it's a targeting problem.

The dual goal: satisfy algorithms and convert decision-makers

My working rule is simple: if the page can't earn a second scroll, it won't earn a sales conversation.

So I write with two checks in mind. First, can a crawler understand the topic and relationships? Second, can a time-poor buyer see themselves in the problem statement and the evaluation criteria? If either fails, the page becomes a vanity asset.

High rankings are not the finish line in B2B. The finish line is qualified pipeline, and that requires intent alignment above the fold.

The Technical Foundation: Auditing Before Writing

Copy can't compensate for crawl waste, broken paths, or slow responses. If the site is unhealthy, you end up "optimizing" pages Google barely visits.

Audit first, then write: what I look for

I start with crawlability and response behavior, not content gaps. Our audits showed that small technical frictions compound: redirect chains, soft 404s, and bloated sitemaps all slow down how quickly new pages get discovered and re-evaluated.
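Redirect chains are easy to spot once the redirect rules are exported as source-to-target pairs. The sketch below assumes a hypothetical `redirect_map` dictionary built from server config or a crawler export. The function names are illustrative and don't come from any tool.

```python
from typing import Dict, List, Tuple


def resolve_redirects(redirects: Dict[str, str], start: str, max_hops: int = 10) -> Tuple[List[str], bool]:
    """Follow redirects from `start`; return the hop path and whether it loops."""
    path = [start]
    seen = {start}
    current = start
    while current in redirects and len(path) <= max_hops:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, True  # loop: the path revisits a URL
        seen.add(current)
    return path, False


def find_chains(redirects: Dict[str, str], min_hops: int = 2) -> Dict[str, List[str]]:
    """Report every source URL that needs min_hops or more (or loops) to resolve."""
    report: Dict[str, List[str]] = {}
    for source in redirects:
        path, looped = resolve_redirects(redirects, source)
        if looped or len(path) - 1 >= min_hops:
            report[source] = path
    return report


# Hypothetical redirect rules exported from server config or a crawler.
redirect_map = {
    "/old-pricing": "/pricing-2022",
    "/pricing-2022": "/pricing",
    "/blog/crm-guide": "/guides/crm",
    "/a": "/b",
    "/b": "/a",
}

for source, path in find_chains(redirect_map).items():
    print(source, "->", " -> ".join(path[1:]))
```

Each reported chain is a candidate for collapsing into a single hop that points at the final URL. Loops need fixing before anything else.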

XML vs. HTML sitemaps: two different jobs

An XML sitemap is a crawl hint. An HTML sitemap is a user navigation artifact that can also support internal linking when the IA is messy.

If you treat them as interchangeable, you usually end up with an XML sitemap that lists everything (including junk) and an HTML sitemap nobody uses. I prefer an XML sitemap that reflects what we actually want indexed, and an HTML sitemap that mirrors how a buyer thinks about categories.
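The "only what we want indexed" rule becomes a filter that runs before the sitemap is written. This minimal sketch uses Python's standard-library ElementTree. It assumes a hypothetical crawl export with a status code, noindex flag, canonical URL, and last-modified date per page.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Iterable

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    url: str
    status: int      # HTTP status from a crawl
    noindex: bool    # meta robots / X-Robots-Tag noindex
    canonical: str   # canonical URL declared by the page
    lastmod: str     # ISO 8601 date, e.g. "2024-03-01"


def indexable(page: Page) -> bool:
    """Keep only 200-status, indexable, self-canonical pages."""
    return page.status == 200 and not page.noindex and page.canonical == page.url


def build_sitemap(pages: Iterable[Page]) -> str:
    """Serialize the indexable subset as a <urlset> document."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if not indexable(page):
            continue
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = page.url
        ET.SubElement(url_el, "lastmod").text = page.lastmod
    return ET.tostring(urlset, encoding="unicode")


pages = [
    Page("https://example.com/pricing", 200, False, "https://example.com/pricing", "2024-03-01"),
    Page("https://example.com/tag/misc", 200, True, "https://example.com/tag/misc", "2023-01-10"),
    Page("https://example.com/old", 404, False, "https://example.com/old", "2022-05-05"),
    Page("https://example.com/pricing?ref=nav", 200, False, "https://example.com/pricing", "2024-03-01"),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

The filter is the part worth arguing about. The criteria here (200 status, no noindex, self-canonical) are a common baseline, not a complete definition of "indexable."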

404s and redirect chains: when "clean up everything" backfires

Redirecting all 404s to the homepage is a common instinct. It looks tidy in a spreadsheet, but it often creates relevance loss and user confusion.

During one audit phase, we chose to ignore 404 errors on legacy product pages that had received zero organic traffic in the preceding 14 months. Instead of redirecting everything to the homepage (a common reflex), we removed the dead ends from internal links and tightened the crawl paths. Our server data showed crawl budget waste dropping by close to 20% and server response time improving by roughly 100ms.

Do not use 410s if the deleted page has external backlinks from high-authority domains (DA > 50). Also, this kind of cleanup often requires server-level access (Apache/Nginx), which is frequently restricted in SaaS CMS environments.
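The two rules above (leave zero-traffic 404s alone but stop linking to them, and never 410 a URL with strong backlinks) can be encoded as a small triage step. This is a hypothetical sketch. The field names and the "redirect to the closest relevant page" action for backlinked URLs are my assumptions; the text only says not to use a 410 there.

```python
from dataclasses import dataclass


@dataclass
class DeadUrl:
    path: str
    organic_sessions_14mo: int  # organic sessions over the last 14 months
    max_backlink_da: int        # highest Domain Authority among linking domains (0 if none)
    internal_links: int         # internal links still pointing at the URL


def triage(url: DeadUrl) -> str:
    """Suggest a cleanup action for a 404 URL (hypothetical rules)."""
    if url.max_backlink_da > 50:
        # High-authority backlinks: no 410; assumed action is a relevant redirect.
        return "redirect to closest relevant page (no 410)"
    if url.organic_sessions_14mo == 0:
        # Dead and unvisited: leave the 404, just stop linking to it.
        if url.internal_links > 0:
            return "leave 404, remove internal links"
        return "leave 404"
    return "review manually"


for dead in [
    DeadUrl("/legacy/widget-v1", 0, 12, 3),
    DeadUrl("/legacy/widget-v2", 0, 72, 0),
    DeadUrl("/legacy/widget-v3", 40, 0, 1),
]:
    print(dead.path, "->", triage(dead))
```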

Expected result: faster recrawls, fewer "wasted" URLs in index coverage reports, and a cleaner baseline so your copy changes are measurable.

Mapping Keywords to the B2B Sales Funnel

Two valid approaches to funnel mapping

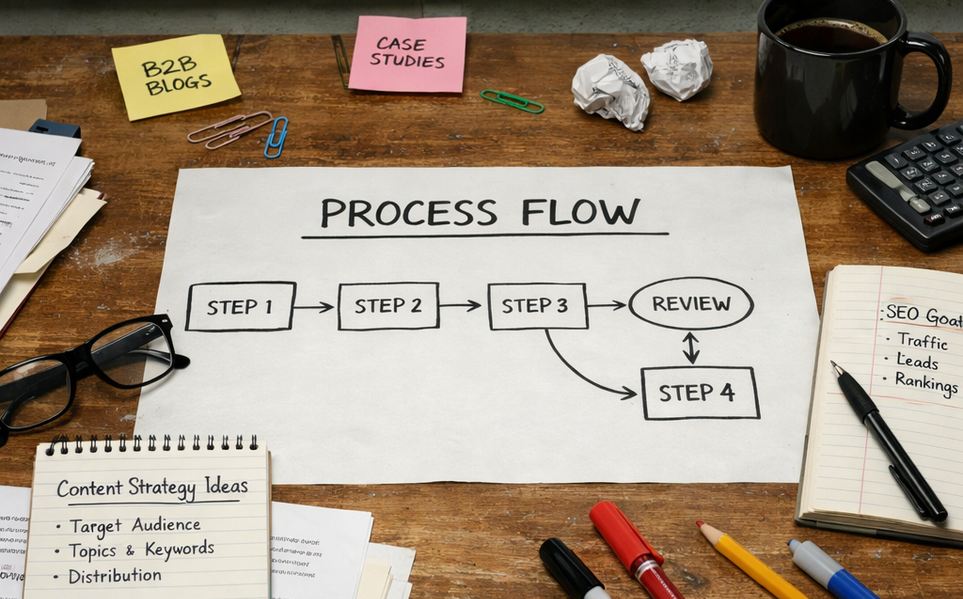

Approach A: map keywords by intent labels (ToFu/MoFu/BoFu) and build content clusters around each stage.

Approach B: map keywords by sales objections (security, integration, procurement, switching cost) and let the funnel stage emerge from the page's CTA and proof depth.
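Both approaches start from the same query list and differ only in which label you assign first. This rule-based sketch uses hypothetical modifier lists. Real lists should come from your own sales calls and tickets, and rules this simple will misfire on edge cases.

```python
import re
from typing import Dict, List, Optional

# Hypothetical modifier lists; dict order sets priority (BoFu is checked first).
STAGE_MODIFIERS: Dict[str, List[str]] = {
    "BoFu": ["pricing", "demo", "quote", "buy"],
    "MoFu": ["vs", "alternative", "comparison", "best"],
    "ToFu": ["what is", "how to", "guide"],
}
OBJECTION_MODIFIERS: Dict[str, List[str]] = {
    "security": ["soc 2", "gdpr", "security", "sso"],
    "integration": ["integration", "api", "connector"],
    "procurement": ["contract", "invoice", "procurement"],
    "switching cost": ["migrate", "migration", "switch from"],
}


def first_match(query: str, rules: Dict[str, List[str]]) -> Optional[str]:
    """Return the first label whose modifier appears as whole words in the query."""
    q = query.lower()
    for label, modifiers in rules.items():
        for modifier in modifiers:
            if re.search(rf"\b{re.escape(modifier)}\b", q):
                return label
    return None


queries = [
    "what is revenue operations",
    "acme vs globex crm",
    "acme crm pricing",
    "acme salesforce integration",
    "migrate from globex to acme",
]
for q in queries:
    print(f"{q!r}: stage={first_match(q, STAGE_MODIFIERS)}, "
          f"objection={first_match(q, OBJECTION_MODIFIERS)}")
```

Queries that get an objection but no stage label are where Approach B earns its keep. A "salesforce integration" searcher is usually mid-evaluation, but no ToFu/MoFu/BoFu modifier says so.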

Trade-offs you feel later

Approach A is easier to operationalize with a content calendar. Approach B tends to produce better sales enablement because it mirrors real conversations, but it's harder to keep tidy in reporting.

I've used both. The failure mode I see most is the "mushy middle," where MoFu content starts ranking for BoFu terms and cannibalizes the pages that should close.

Recommendation: separate evaluation from purchase intent on purpose

We mapped keywords to the funnel and discovered MoFu content was cannibalizing BoFu rankings. The fix was not more content. We de-optimized comparison posts for "buy" intent keywords and explicitly repositioned them as evaluation assets.
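Cannibalization like this shows up in a query-and-page export from Search Console. This minimal sketch assumes hypothetical rows with query, page, impressions, and average position, plus a hand-maintained page-to-stage map. The 50-impression floor is an arbitrary noise filter.

```python
import math
from collections import defaultdict
from typing import Dict, List, NamedTuple


class Row(NamedTuple):
    query: str
    page: str
    impressions: int
    position: float  # average position; lower is better


def mofu_over_bofu(rows: List[Row], page_stage: Dict[str, str],
                   min_impressions: int = 50) -> Dict[str, List[str]]:
    """Flag queries where a MoFu page's best position beats every BoFu page."""
    by_query: Dict[str, List[Row]] = defaultdict(list)
    for row in rows:
        if row.impressions >= min_impressions:  # ignore low-impression noise
            by_query[row.query].append(row)

    flagged: Dict[str, List[str]] = {}
    for query, competing in by_query.items():
        best = {"MoFu": math.inf, "BoFu": math.inf}
        for row in competing:
            stage = page_stage.get(row.page)
            if stage in best:
                best[stage] = min(best[stage], row.position)
        # Both stages present and the MoFu page ranks higher (smaller position).
        if best["MoFu"] < best["BoFu"] < math.inf:
            flagged[query] = [r.page for r in sorted(competing, key=lambda r: r.position)]
    return flagged


rows = [
    Row("acme pricing", "/blog/acme-vs-globex", 400, 3.2),
    Row("acme pricing", "/pricing", 380, 5.8),
    Row("acme vs globex", "/blog/acme-vs-globex", 900, 1.4),
    Row("buy acme licenses", "/pricing", 120, 2.1),
    Row("buy acme licenses", "/blog/acme-vs-globex", 30, 9.0),
]
page_stage = {"/blog/acme-vs-globex": "MoFu", "/pricing": "BoFu"}
print(mofu_over_bofu(rows, page_stage))
```

A flagged query is a candidate for the de-optimization described above: strip the "buy" framing from the MoFu page so the BoFu page owns that intent.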

According to our attribution data, average time-to-conversion for MoFu readers was around 40 days, and assisted conversions rose by roughly 15% after we removed aggressive CTAs.

If your sales cycle is longer than a month, treat MoFu pages like "pre-sales documentation." Let them earn trust, then hand off to BoFu pages that are built to convert.

Expected result: fewer ranking collisions, cleaner intent signals, and a funnel that doesn't force every reader into a demo request before they're ready.

This approach is not recommended for sales cycles shorter than 21 days, and it requires a multi-touch attribution model to justify the lack of direct leads.
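Multi-touch attribution is what lets a MoFu page get credit for a deal it never closed. This is a minimal linear-attribution sketch. Linear is only one of several models; position-based or data-driven models would weight touches differently. It assumes hypothetical journeys of page touchpoints for each closed deal.

```python
from collections import defaultdict
from typing import Dict, List


def linear_attribution(journeys: List[List[str]], deal_values: List[float]) -> Dict[str, float]:
    """Split each deal's value evenly across the touchpoints in its journey."""
    if len(journeys) != len(deal_values):
        raise ValueError("each journey needs exactly one deal value")
    credit: Dict[str, float] = defaultdict(float)
    for touches, value in zip(journeys, deal_values):
        if not touches:
            continue  # untracked journey: nothing to credit
        share = value / len(touches)
        for touch in touches:
            credit[touch] += share
    return dict(credit)


# Hypothetical closed-won journeys and deal values.
journeys = [
    ["/blog/acme-vs-globex", "/case-studies/fintech", "/pricing"],
    ["/pricing"],
]
deal_values = [30000.0, 12000.0]
credit = linear_attribution(journeys, deal_values)
print(credit)
```

Under last-touch attribution the comparison post would get nothing. Here it gets a third of the first deal, which is the kind of evidence MoFu pages need once their aggressive CTAs are gone.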

On-Page Optimization: Structuring for Readability and Rank

Title Tags and Meta Descriptions: write for the click you can keep

I treat the Title Tag as a promise and the first paragraph as the proof. If those two don't match, you get the worst combination: a click that bounces.

For the EU market, we adjusted title tag lengths. US guidance often cites ~60 characters, but German and French translations pushed critical keywords past the truncation point. After localized testing, we established region-specific length rules: the title tag truncation rate dropped to under 2%, and CTR on localized SERPs increased by around 5–7%.

These rules are only necessary for multi-regional sites using hreflang tags. In English-only markets, strict character limits can also reduce click-bait potential, so I don't force them where they don't pay off.
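Region-specific rules are easiest to enforce as a pre-publish check. This minimal sketch assumes hypothetical per-locale character limits and one critical keyword per title. SERP titles are generally truncated by display width rather than a fixed character count, so any character limit is an approximation.

```python
from typing import Dict

# Hypothetical per-locale limits; tune against your own SERP observations.
TITLE_LIMITS: Dict[str, int] = {"en-US": 60, "de-DE": 55, "fr-FR": 55}


def check_title(title: str, locale: str, keyword: str) -> Dict[str, bool]:
    """Check overall length and whether the critical keyword ends before the cut-off."""
    limit = TITLE_LIMITS.get(locale, 60)
    pos = title.lower().find(keyword.lower())
    return {
        "within_limit": len(title) <= limit,
        "keyword_visible": pos != -1 and pos + len(keyword) <= limit,
    }


print(check_title("Acme CRM Pricing | Plans for B2B Teams", "en-US", "pricing"))
print(check_title("Preise und Pakete für Vertriebsteams im Mittelstand | Acme CRM", "de-DE", "Acme CRM"))
print(check_title("Acme CRM: Preise für Vertriebsteams im Mittelstand", "de-DE", "Acme CRM"))
```

The second check matters more than the first: a title can run long and still work if the critical keyword sits before the cut-off.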

Header hierarchy: make the page scannable for humans and parsable for crawlers

Use headings like you're outlining an argument. H2s should carry the major claims. H3s should carry the supporting logic or the "how."

- H2: the decision the reader is trying to make

- H3: the criteria, steps, or constraints that shape that decision

- Body copy: evidence, examples, and the specific next action
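A skipped heading level is a cheap proxy for an outline that lost its argument. This minimal sketch uses Python's standard-library HTMLParser and assumes you have the rendered HTML of the page. It only flags jumps (for example, H2 straight to H4) and says nothing about whether the headings carry the right claims.

```python
import re
from html.parser import HTMLParser
from typing import List, Tuple


class HeadingCollector(HTMLParser):
    """Collect (level, text) for h1-h6 elements in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.headings: List[Tuple[int, str]] = []
        self._level = 0
        self._buf: List[str] = []

    def handle_starttag(self, tag, attrs):
        if re.fullmatch(r"h[1-6]", tag):
            self._level = int(tag[1])
            self._buf = []

    def handle_data(self, data):
        if self._level:
            self._buf.append(data)  # includes text inside nested tags like <em>

    def handle_endtag(self, tag):
        if self._level and tag == f"h{self._level}":
            self.headings.append((self._level, "".join(self._buf).strip()))
            self._level = 0


def skipped_levels(html: str) -> List[str]:
    """Flag headings that go more than one level deeper than the previous heading."""
    parser = HeadingCollector()
    parser.feed(html)
    problems = []
    prev = 0
    for level, text in parser.headings:
        if prev and level > prev + 1:
            problems.append(f"h{prev} -> h{level}: {text!r}")
        prev = level
    return problems


page = "<h1>B2B CRM</h1><h2>Choosing a CRM</h2><h4>Security review</h4><h3>Integration</h3>"
print(skipped_levels(page))
```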

URL structure: boring is good

Use hyphens, not underscores. Keep URLs short enough that a sales rep can read them out loud without apologizing.
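Short, hyphenated slugs are easy to generate consistently. This minimal sketch uses a hypothetical English stop-word list and a six-word cap; both are judgment calls, not rules.

```python
import re
import unicodedata

STOP_WORDS = {"a", "an", "and", "for", "in", "of", "the", "to"}  # hypothetical list


def slugify(title: str, max_words: int = 6) -> str:
    """Lowercase, fold accents to ASCII, drop stop words, join with hyphens."""
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Any non-alphanumeric run (spaces, underscores, punctuation) becomes a separator.
    words = [w for w in re.findall(r"[a-z0-9]+", ascii_text.lower()) if w not in STOP_WORDS]
    return "-".join(words[:max_words])


print(slugify("SOC 2 Compliance Checklist for B2B SaaS Vendors"))
print(slugify("Über_uns: Preise & Pakete"))
```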

And don't ignore images. Alt tags are not decoration; they're part of how you communicate context when the image doesn't load, and they help search engines interpret what the page is about. If you want a deeper take, I wrote about the role of alt tags and where they actually matter.
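Missing alt attributes are quick to find in rendered HTML. This minimal standard-library sketch separates missing alt attributes from empty ones, because `alt=""` is the accepted way to mark a purely decorative image.

```python
from html.parser import HTMLParser
from typing import List


class AltAudit(HTMLParser):
    """Record <img> sources with missing or empty alt attributes."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: List[str] = []  # no alt attribute (or no value): fix these
        self.empty: List[str] = []    # alt="": fine only if the image is decorative

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        src = attr_map.get("src") or "(no src)"
        alt = attr_map.get("alt")
        if alt is None:
            self.missing.append(src)
        elif not alt.strip():
            self.empty.append(src)


html = ('<img src="/img/funnel.png" alt="B2B funnel stages">'
        '<img src="/img/divider.svg" alt="">'
        '<img src="/img/schema-flow.png">')
audit = AltAudit()
audit.feed(html)
print("missing:", audit.missing)
print("empty (check if decorative):", audit.empty)
```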

Expected result: higher CTR from SERPs, better on-page comprehension, and fewer "optimized but unreadable" pages that stall out after initial indexing.

Advanced Signals: Schema, Local SEO, and CRO

[Figure: Schema validation workflow with regional site folder mapping]

Schema: when it helps, and when it becomes a time sink

Schema is worth doing when it reduces ambiguity. It's not worth doing when it becomes a parallel content system nobody maintains.

We implemented Organization schema and hit validation errors because local business data conflicted across regional subfolders. The fix was structural: we nested Department schema under the main organization and made the regional entities explicit. Rich snippet eligibility was then achieved in over 90% of target regions, and implementation took roughly 12–16 hours of developer time.

If you need a starting point for markup, Google's Structured Data Markup Helper is practical for scoping, even if you later hand-author JSON-LD.

Schema nesting only works if physical addresses are distinct. It's ineffective for purely digital service providers without physical footprints.
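Nesting like this can be hand-authored as JSON-LD. This minimal sketch models each regional office as a LocalBusiness entry under schema.org's `department` property on the Organization. It shows one way to express the nesting, not necessarily the exact markup described above. The office data and helper name are hypothetical, and the duplicate-address check encodes the constraint above.

```python
import json
from typing import Dict, List


def organization_jsonld(name: str, url: str, offices: List[Dict[str, str]]) -> str:
    """Build Organization JSON-LD with one department entry per regional office."""
    addresses = [(o["street"], o["city"], o["country"]) for o in offices]
    if len(set(addresses)) != len(addresses):
        # Nesting only disambiguates entities when each has its own physical address.
        raise ValueError("regional offices must have distinct physical addresses")
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "department": [
            {
                "@type": "LocalBusiness",
                "name": f"{name} {o['city']}",
                "url": o["url"],
                "address": {
                    "@type": "PostalAddress",
                    "streetAddress": o["street"],
                    "addressLocality": o["city"],
                    "addressCountry": o["country"],
                },
            }
            for o in offices
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


offices = [
    {"city": "Berlin", "street": "Friedrichstraße 1", "country": "DE", "url": "https://example.com/de/berlin/"},
    {"city": "Paris", "street": "1 Rue de Rivoli", "country": "FR", "url": "https://example.com/fr/paris/"},
]
markup = organization_jsonld("Acme", "https://example.com/", offices)
print(markup)
```

Whatever generates the markup, validate the output (for example with Google's Rich Results Test) before shipping it.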

Local SEO for B2B agencies: the nuance is in the footprint

Local SEO can matter for B2B, but usually in a narrow band: agencies with real offices, regional delivery, and buyers who filter by location during vendor shortlisting.

If your "locations" are just landing pages with swapped city names, you'll spend time and get little trust back.
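One quick way to catch swapped-city pages is to mask the city name and compare what's left. This minimal sketch uses the standard library's difflib. The masking approach and any flagging threshold are assumptions to tune. A high score means "this page needs real local substance," not a prediction of how Google will treat it.

```python
import re
from difflib import SequenceMatcher


def normalized(text: str, city: str) -> str:
    """Mask the city name so swapped-city pages compare as equal."""
    return re.sub(re.escape(city), "{city}", text, flags=re.IGNORECASE).lower()


def location_similarity(page_a: str, city_a: str, page_b: str, city_b: str) -> float:
    """Similarity ratio (0.0 to 1.0) of two location pages with cities masked."""
    return SequenceMatcher(None, normalized(page_a, city_a), normalized(page_b, city_b)).ratio()


munich = "Our Munich team runs on-site CRM workshops for Munich manufacturers."
hamburg = "Our Hamburg team runs on-site CRM workshops for Hamburg manufacturers."
vienna = "Our Vienna office hosts quarterly procurement clinics with Austrian public-sector buyers."

print(location_similarity(munich, "Munich", hamburg, "Hamburg"))  # swapped city names only
print(location_similarity(munich, "Munich", vienna, "Vienna"))    # genuinely different page
```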

CRO: use behavior data to edit, not to decorate

Heatmaps (Hotjar) are most useful when you already have a hypothesis. Session recordings suggest that readers don't ignore long pages; they ignore pages that don't pay off early.

I look for three signals: rage clicks on non-clickable elements, dead zones above the fold, and scroll drop-offs right after a vague claim. Then I rewrite the claim with constraints, numbers, or a concrete example.
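Scroll drop-offs are easier to act on once you find the single steepest cliff. This minimal sketch assumes you can export each session's maximum scroll depth (0 to 100%) from your analytics or heatmap tool. It tells you where to look; the rewrite still starts from reading the copy at that depth.

```python
from typing import Dict, List, Tuple


def retention_curve(max_depths: List[int], step: int = 10) -> Dict[int, float]:
    """Share of sessions that reached each scroll-depth bucket (0 to 100%)."""
    total = len(max_depths)
    return {d: sum(1 for m in max_depths if m >= d) / total for d in range(0, 101, step)}


def steepest_drop(curve: Dict[int, float]) -> Tuple[int, int, float]:
    """Return (from_depth, to_depth, loss) for the largest drop between adjacent buckets."""
    depths = sorted(curve)
    pairs = zip(depths, depths[1:])
    return max(((a, b, curve[a] - curve[b]) for a, b in pairs), key=lambda t: t[2])


# Hypothetical maximum scroll depth (%) for ten sessions on one page.
max_depths = [15, 22, 25, 28, 30, 35, 70, 80, 95, 100]
curve = retention_curve(max_depths)
start, end, loss = steepest_drop(curve)
print(f"Steepest drop: {start}% to {end}% loses {loss:.0%} of sessions")
```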

Measuring Content Performance

UTM codes: still useful, but don't pretend they're the truth

UTMs help when content gets reused in email, paid social, and partner newsletters. They're less helpful for pure organic discovery, where the first touchpoint is often untagged.
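When the same asset goes out through email, paid social, and partner newsletters, consistent tagging matters more than clever naming. This minimal standard-library sketch keeps existing query parameters and replaces any stale UTM values. The parameter values in the example are hypothetical, and organic search clicks will still arrive untagged.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url: str, source: str, medium: str, campaign: str, content: str = "") -> str:
    """Append UTM parameters, keeping non-UTM query parameters intact."""
    parts = urlsplit(url)
    params = [(k, v) for k, v in parse_qsl(parts.query) if not k.startswith("utm_")]
    params += [("utm_source", source), ("utm_medium", medium), ("utm_campaign", campaign)]
    if content:
        params.append(("utm_content", content))
    return urlunsplit(parts._replace(query=urlencode(params)))


print(add_utm("https://example.com/guides/soc2-checklist?lang=de",
              "partner-newsletter", "email", "q3-security"))
```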

Vanity metrics vs. conversion data: pick the metric that matches the decision

Sessions are easy to report. Pipeline is harder, but it's the only metric that survives budget scrutiny.

GDPR-induced data gaps in standard SEO reporting are not theoretical. Because of strict EU cookie consent rules (the ePrivacy Directive), we lost visibility into roughly 35–40% of initial touchpoints. We shifted from reporting on "Sessions" to "Search Console Clicks" and server-side indicators where possible. In our internal comparison, the discrepancy between GA4 and server logs was close to 30%.

That gap changes how you argue for SEO. You end up triangulating: Search Console for demand capture, CRM for lead quality, and assisted conversions for influence.
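The GA4-versus-server-log gap is worth tracking as a time series rather than a one-off number. This minimal sketch assumes you already have daily GA4 session counts and daily human (bot-filtered) visits from server logs. The two aren't defined identically, so treat the result as a trend indicator rather than an exact consent-loss rate.

```python
from typing import Dict


def daily_gap(ga4_sessions: Dict[str, int], server_visits: Dict[str, int]) -> Dict[str, float]:
    """Per-day share of server-side visits that GA4 did not record."""
    return {
        day: 1 - ga4_sessions.get(day, 0) / visits
        for day, visits in server_visits.items()
        if visits > 0
    }


def overall_gap(ga4_sessions: Dict[str, int], server_visits: Dict[str, int]) -> float:
    """Volume-weighted gap across the whole period."""
    return 1 - sum(ga4_sessions.values()) / sum(server_visits.values())


# Hypothetical daily counts.
server = {"2024-05-01": 1000, "2024-05-02": 800}
ga4 = {"2024-05-01": 700, "2024-05-02": 560}
gaps = daily_gap(ga4, server)
print(gaps)
print(f"Overall gap: {overall_gap(ga4, server):.0%}")
```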

The long-term nature of organic results: define the ramp-up window

In competitive sectors, we defined the organic ramp-up period as 7–9 months. That window is long enough that you need leading indicators, but short enough that you can still run controlled updates.

When reporting is tied to pipeline, the job shifts from "did we rank?" to "did we reduce uncertainty for the buyer at the moment they were evaluating?"

— Ethan Caldwell, Senior SEO Strategist

One qualifier I'll add, because it comes up in stakeholder reviews: this strategy fails if stakeholders prioritize vanity traffic metrics over pipeline velocity, and it's ineffective for low-ticket B2B products (under €500 LTV).

Measure what you can defend: Search Console clicks for capture, attribution for influence, and qualification rate for business value.
